Welcome to the Avi Portal...

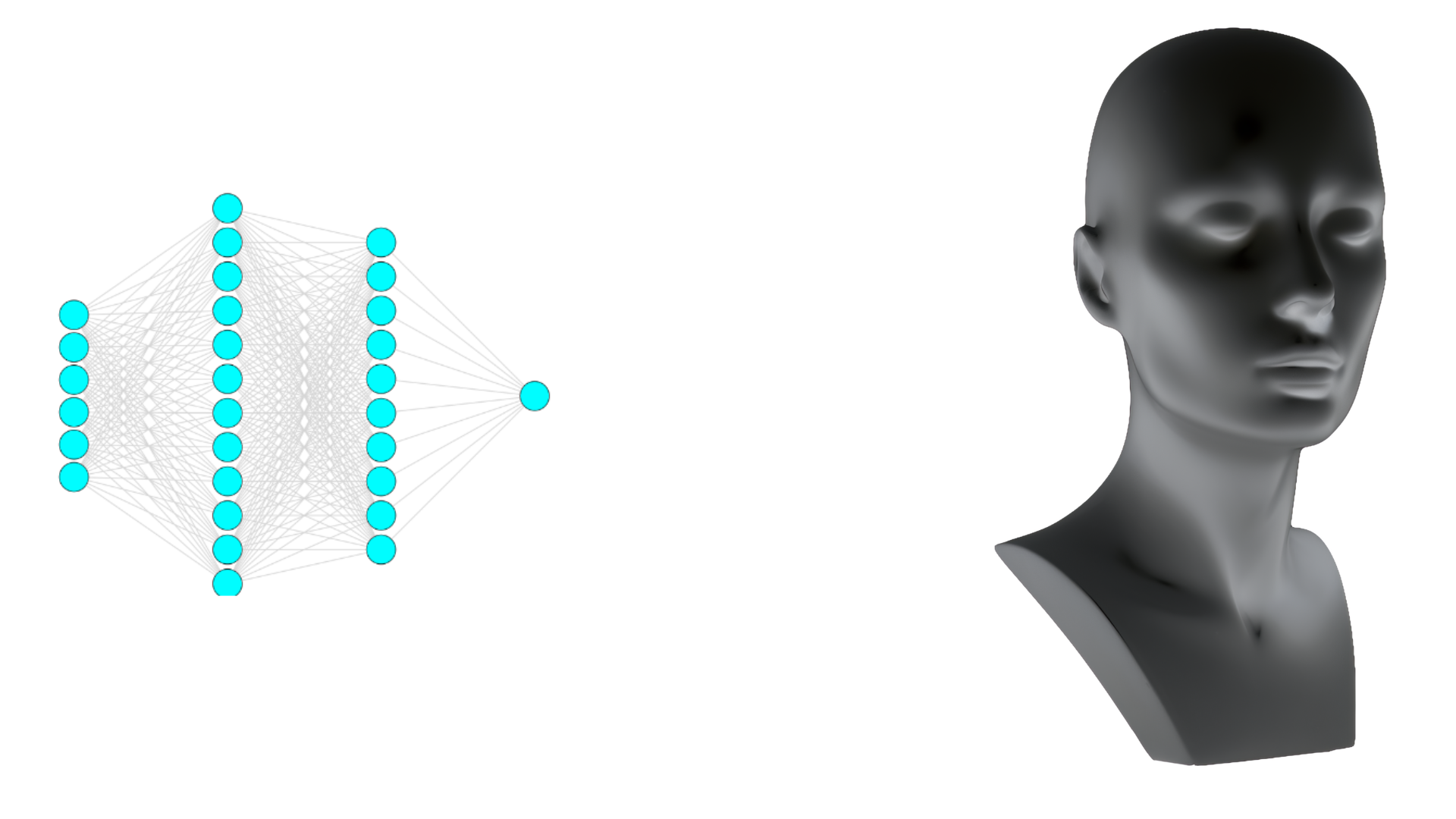

Greetings, traveler of the digital realms. I am Avi Amalanshu of CMU (MSML), formerly a dual-degree student (5-year B.Tech + M.Tech) in the ECE department at IIT Kharagpur. My vision is that one day you and I can train and run inference on reasonable AI agents aligned to our own values, without bearing the cost of throwing half the internet at a bazillion GPUs for a whole year.

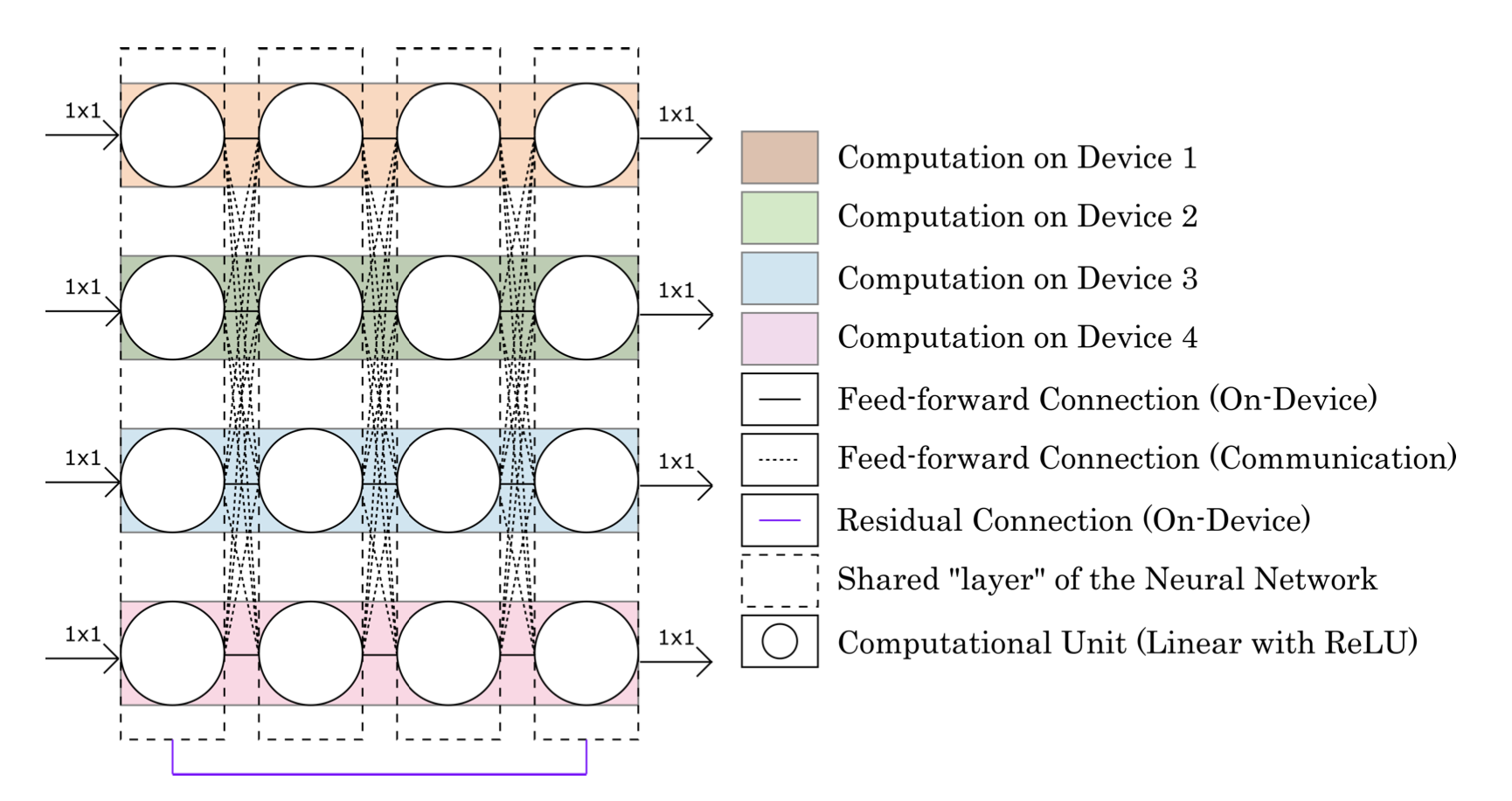

Towards that, I'm exploring the theory of representation learning. My broad hypothesis is that domain expertise should inform structural priors, not knowledge priors; given that, we might aim to uncover latent aleatoric structure. Fine-tuning to learn epistemic behavior within that framework is then akin to the theories of generalizable, efficient human learning from the linguists' universal grammar school of thought.

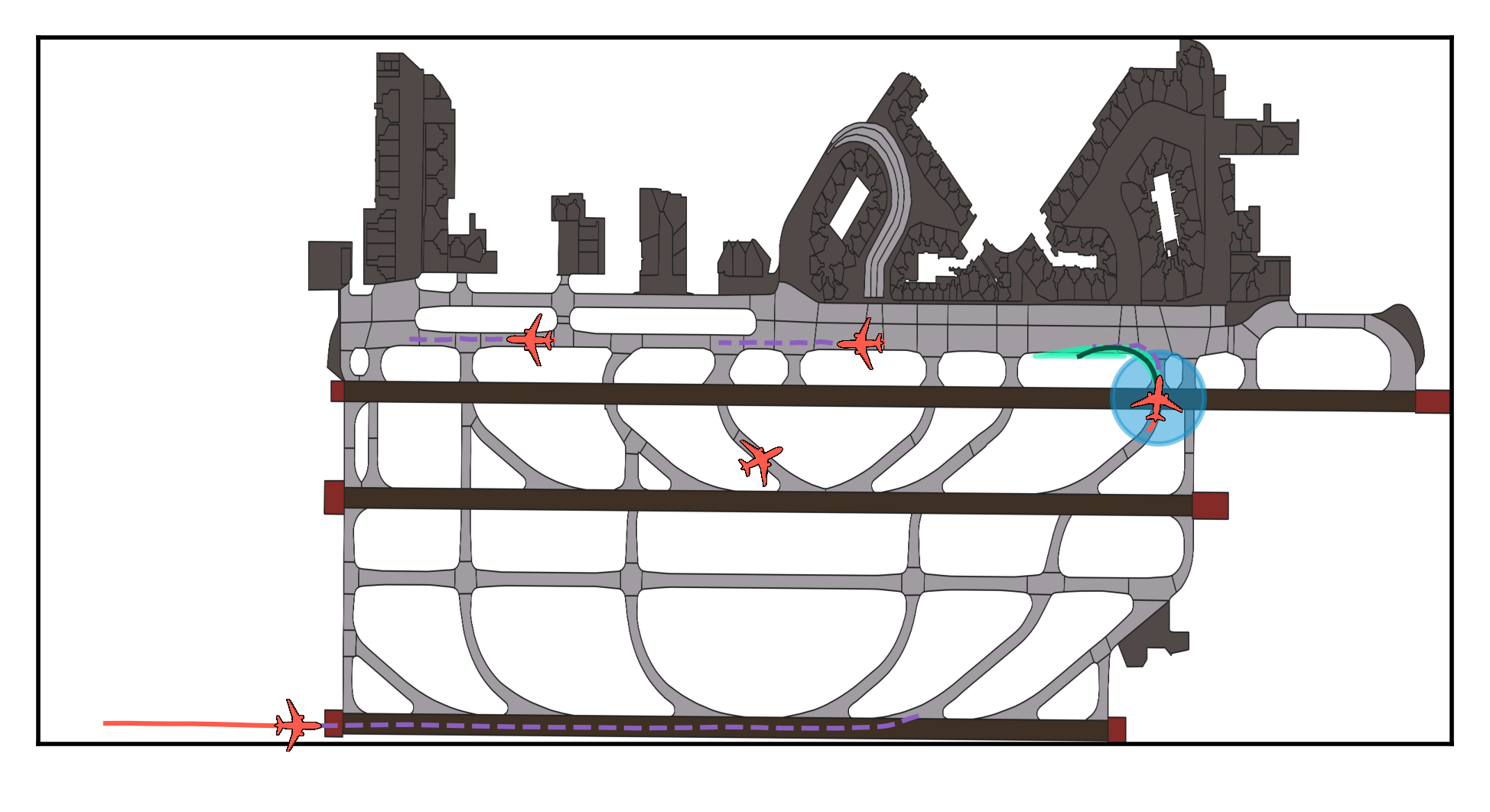

My sweet spot is the boundary between state-of-the-art and esoteric. I like to call it avant-garde.